Pointing Gesture Recognition

Among the gestures that humans perform intuitively when communicating with each other, pointing gestures are especially interesting for communication with robots. They make it possible to indicate objects and locations intuitively, e.g., to make a robot change its direction of movement or simply to mark an object. This is particularly useful in combination with speech recognition, since pointing gestures can supply the location parameters of verbal statements (“put the cup there!”).

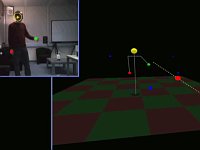

Our pointing gesture recognition system classifies the 3D trajectories of the user's hands by means of Hidden Markov Models (HMMs). Once a gesture is detected, the pointing direction is extracted from the peak of the gesture. We also experimented with head orientation as an additional feature for pointing gesture recognition. Since people tend to look at what they point at, head orientation proved to be an important cue for reducing the number of false positives.
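The three steps above (HMM classification, direction extraction at the peak, head-orientation check) can be sketched as follows. This is a minimal illustration under several assumptions, not the authors' implementation. Per-frame hand motion is quantized into three hypothetical symbols (`AWAY`, `STILL`, `BACK`). The gesture is modeled by a hypothetical three-state left-right HMM (begin, hold, end) that is compared against a one-state null model by log-likelihood ratio. The "peak" is taken to be the frame where the hand is farthest from the head, and the pointing direction is approximated by the head-hand line. The head-orientation cue is reduced to an angular gate with a made-up 45° threshold. All probabilities and thresholds are illustrative values, not trained parameters.

```python
import math

# Hypothetical per-frame observation symbols derived from the hand trajectory
# relative to the head (the original system's features may differ).
AWAY, STILL, BACK = 0, 1, 2

# Hypothetical 3-state left-right "pointing" HMM: begin -> hold -> end.
# Illustrative numbers only; a real model would be trained on labeled data.
POINTING = {
    "start": [1.0, 0.0, 0.0],
    "trans": [[0.7, 0.3, 0.0],
              [0.0, 0.8, 0.2],
              [0.0, 0.0, 1.0]],
    "emit": [[0.80, 0.15, 0.05],   # begin: hand mostly moves away from the head
             [0.10, 0.80, 0.10],   # hold: hand mostly still
             [0.05, 0.15, 0.80]],  # end: hand mostly moves back
}
# Hypothetical one-state null ("garbage") model with uniform emissions.
NULL = {"start": [1.0], "trans": [[1.0]], "emit": [[1 / 3, 1 / 3, 1 / 3]]}


def _log(p):
    return math.log(p) if p > 0 else -math.inf


def _logsumexp(xs):
    m = max(xs)
    if m == -math.inf:
        return -math.inf
    return m + math.log(sum(math.exp(x - m) for x in xs))


def log_likelihood(obs, hmm, final_state=None):
    """Forward algorithm in log space.

    Returns log P(obs) or, if final_state is given, log P(obs, last state = final_state).
    """
    start, trans, emit = hmm["start"], hmm["trans"], hmm["emit"]
    n = len(start)
    alpha = [_log(start[s]) + _log(emit[s][obs[0]]) for s in range(n)]
    for o in obs[1:]:
        alpha = [_logsumexp([alpha[p] + _log(trans[p][s]) for p in range(n)])
                 + _log(emit[s][o]) for s in range(n)]
    return alpha[final_state] if final_state is not None else _logsumexp(alpha)


def is_pointing(obs, threshold=0.0):
    """Accept if the pointing HMM, forced to end in its 'end' state, beats the null model."""
    llr = log_likelihood(obs, POINTING, final_state=2) - log_likelihood(obs, NULL)
    return llr > threshold


def pointing_direction(heads, hands):
    """Unit head->hand vector at the 'peak' frame.

    The peak is assumed here to be the frame where the hand is farthest from the head.
    """
    peak = max(range(len(hands)), key=lambda i: math.dist(heads[i], hands[i]))
    d = [hand_c - head_c for hand_c, head_c in zip(hands[peak], heads[peak])]
    norm = math.hypot(*d)
    return [x / norm for x in d]


def head_agrees(direction, head_orientation, max_angle_deg=45.0):
    """Hypothetical gate: keep the gesture only if the head roughly faces the target.

    `direction` must be a unit vector; `head_orientation` may have any nonzero length.
    """
    norm = math.hypot(*head_orientation)
    cos = sum(a * b for a, b in zip(direction, head_orientation)) / norm
    return math.degrees(math.acos(max(-1.0, min(1.0, cos)))) <= max_angle_deg


if __name__ == "__main__":
    print(is_pointing([AWAY] * 3 + [STILL] * 4 + [BACK] * 3))  # True
    print(is_pointing([AWAY, BACK] * 5))                        # False (waving hand)
```

Forcing the forward pass to end in the `end` state means a gesture is only accepted once the complete begin-hold-end sequence has been observed, which is one simple way to suppress partial movements. A trained system could instead use continuous (e.g., Gaussian) emissions over the 3D hand coordinates rather than three hand-crafted symbols.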

Selected Publications

- Kai Nickel, Rainer Stiefelhagen. Visual recognition of pointing gestures for human-robot interaction. Image and Vision Computing, Elsevier, Volume 25, Issue 12, 3 December 2007, Pages 1875-1884.

- Kai Nickel, Edgar Seemann, Rainer Stiefelhagen. 3D-Tracking of Heads and Hands for Pointing Gesture Recognition in a Human-Robot Interaction Scenario. 6th International Conference on Face and Gesture Recognition, Seoul, Korea, May 2004.

- See the publications page for more.